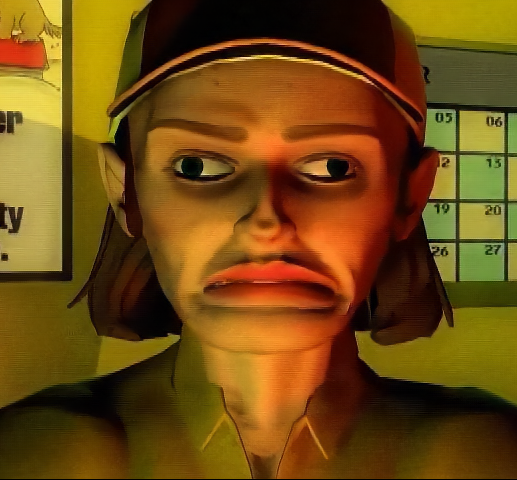

This probably isn’t a super-helpful answer, but for the most part, I haven’t needed to use any (yet?). Dunno if it’s just me, but pretty much every AI-generated image still just looks “off” and uncanny in a perceptible and slightly off-putting way.

That said, there are occasional false positives depending on the lighting, focus, and filters used for legit photographs. No false negatives yet, though.

> No false negatives yet, though.

Good old toupee fallacy

Some people were fooled by manual edits in Photoshop before this, so I’m sure there’s a gradient.

So far I’m with you, I can tell at least for now.

Yeah, not sure how long that’ll hold up for me, but for now, so far so good.

The rule of thumb used to be “look at the hands”, but I use a combination of focus, lighting, perspective, background objects (especially ones with text), color saturation, common sense (e.g. ‘could this even be remotely real?’), etc. The scary part is that if someone ran the image through a filter and presented it as grainy CCTV footage, all of that (minus the common sense part) would be lost and I’d likely be stumped.

> No false negatives yet, though.

Can’t be sure of that. There may have been some that you didn’t suspect were AI, so you didn’t bother investigating.

For the most part you’re right. I can often catch them just by noticing mistakes, but we never know how many REALLY good ones slipped through the cracks.

Artist here. I don’t use any of those; I use my eyes. Using AI to try to detect AI always rubs me the wrong way because the false positive rate is high. I’ve even seen Van Gogh paintings get wrongly labeled as AI-generated.

> I’ve even seen Van Gogh paintings get wrongly labeled

That’s way less surprising than an indie artist’s work being wrongly labeled. It has nothing to do with quality; it’s just that Van Gogh paintings are likely to be heavily overrepresented in the training data.

A thought: any AI image detector is a de facto trainer for AI image generators. It necessarily becomes a kind of arms race, in the same way that spam generators test their payloads against spam filters.
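To make the arms-race point concrete, here’s a toy Python sketch. Everything in it is hypothetical: the detector is any black box that returns a score (higher meaning “more likely AI”), the image is reduced to a couple of made-up features in [0, 1], and `evade` is a simple greedy search. The generator side never needs to understand the detector; it just keeps whichever small tweaks lower the score, which is the same loop a spammer runs against a filter.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical detector: maps a feature vector to a score; higher = "more likely AI".
Detector = Callable[[Sequence[float]], float]


def evade(
    features: Sequence[float],
    detector: Detector,
    threshold: float = 0.5,
    step: float = 0.05,
    max_rounds: int = 200,
) -> Tuple[List[float], float]:
    """Greedy black-box search: nudge one feature at a time and keep any
    change that lowers the detector's score, until it drops below `threshold`."""
    x = list(features)
    score = detector(x)
    for _ in range(max_rounds):
        if score < threshold:
            break
        improved = False
        for i in range(len(x)):
            for delta in (-step, step):
                trial = x.copy()
                # Clamp to [0, 1] so the toy "image" stays in range.
                trial[i] = min(1.0, max(0.0, trial[i] + delta))
                trial_score = detector(trial)
                if trial_score < score:
                    x, score = trial, trial_score
                    improved = True
        if not improved:
            break  # stuck: no single tweak helps, so the detector "wins" for now
    return x, score


if __name__ == "__main__":
    # Toy detector: a weighted sum of two made-up features.
    toy = lambda f: 0.6 * f[0] + 0.4 * f[1]
    tweaked, final = evade([0.9, 0.9], toy)
    print(tweaked, final)
```

A real generator would use far richer features, or the detector’s gradients directly as in GAN training, but the feedback loop is the same: every published detector doubles as a target to optimize against.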

I don’t feel like I need one. If it’s badly made, I can usually tell with high confidence that it’s AI, and if it’s made so well that I can’t tell, I generally don’t care either.

I don’t, and to be blunt, I don’t think any approach is going to be effective in the long run. I can think of many technical approaches, but it’s fundamentally a game of whack-a-mole: the technology keeps changing, so identifying its flaws is hard. False negatives may also be a serious problem, because now you’ve got a website saying that something is a real photograph. In some cases it may be useful to identify a particular image as generated, but I think that will very much be an imperfect, best-effort thing, and it will get harder over time.

I am pretty sure that we’re going to have a combination of computer vision software and generative AIs producing 3D images at some point, and a lot of these techniques go out the window then.

I’m also assuming that you’re talking about images generated to look like photographs here.


Looking for EXIF metadata that flags the image as AI-generated. This is the easiest approach, but it will only catch images from people who aren’t deliberately trying to pass off generated images as real.
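One wrinkle with the metadata check: tools that save PNGs often don’t write EXIF at all, but PNG text chunks instead. As far as I know, the AUTOMATIC1111 Stable Diffusion web UI stores its settings under a `parameters` key and ComfyUI uses `prompt` and `workflow`, but treat that key list as an assumption rather than a spec. Here’s a minimal, dependency-free Python sketch that reads uncompressed `tEXt` chunks and flags those keys. It ignores compressed `zTXt`/`iTXt` chunks, and anyone who strips metadata sails straight past it.

```python
import struct
import zlib
from typing import Dict

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Assumption: text-chunk keys that some generator front ends write.
# Illustrative only; not an exhaustive or authoritative list.
SUSPECT_KEYS = {"parameters", "prompt", "workflow"}


def png_text_chunks(data: bytes) -> Dict[str, str]:
    """Return key/value pairs from a PNG's uncompressed tEXt chunks."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos, out = len(PNG_SIGNATURE), {}
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4-byte length + 4-byte type + body + 4-byte CRC
        if ctype == b"tEXt":
            # tEXt is "keyword\0text", both Latin-1 per the PNG spec.
            key, _, value = body.partition(b"\x00")
            out[key.decode("latin-1")] = value.decode("latin-1")
        elif ctype == b"IEND":
            break
    return out


def looks_generated(data: bytes) -> bool:
    """True if any tEXt key matches one of the suspected generator keys."""
    return not SUSPECT_KEYS.isdisjoint(png_text_chunks(data))


def make_chunk(ctype: bytes, body: bytes) -> bytes:
    """Build a PNG chunk (handy for tests); the CRC covers type + body."""
    crc = zlib.crc32(ctype + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + ctype + body + struct.pack(">I", crc)
```

Usage would be something like `looks_generated(open("image.png", "rb").read())`; a `True` is a strong hint, while `False` tells you almost nothing.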


It’s possible for a generated image to contain elements that look very similar to images in the model’s training set. Taking chunks of the image and running TinEye-style fuzzy hashing on them might theoretically turn up an image that was in the training set, which would be a giveaway. I don’t know how well TinEye can identify portions of images, though it can do so to some degree. If I had to improve on TinEye, I’d probably do something like edge detection and vectorization, then measure the angles between lines and the proportional distances to line intersections.
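TinEye’s matching algorithm isn’t public, so as a stand-in here’s a Python sketch of the chunked-lookup idea using a simple difference hash (dHash) per tile, compared by Hamming distance. It assumes images are already decoded into grayscale rows of 0-255 integers (e.g. by an image library), and the `index` of known training images is hypothetical; a real system would need a proper nearest-neighbour index instead of this brute-force loop.

```python
from typing import Dict, List, Tuple

Gray = List[List[int]]  # grayscale image as rows of 0-255 values
TileHash = Tuple[Tuple[int, int], int]  # ((x, y) of tile, 64-bit hash)


def dhash(gray: Gray, size: int = 8) -> int:
    """Difference hash: downsample to (size+1) x size, then record whether
    each pixel is brighter than its right-hand neighbour."""
    h, w = len(gray), len(gray[0])
    small = [
        [gray[y * h // size][x * w // (size + 1)] for x in range(size + 1)]
        for y in range(size)
    ]
    bits = 0
    for row in small:
        for x in range(size):
            bits = (bits << 1) | int(row[x] > row[x + 1])
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def tile_hashes(gray: Gray, tile: int = 32) -> List[TileHash]:
    """Hash every full tile x tile block of the image."""
    out = []
    for ty in range(0, len(gray) - tile + 1, tile):
        for tx in range(0, len(gray[0]) - tile + 1, tile):
            patch = [row[tx:tx + tile] for row in gray[ty:ty + tile]]
            out.append(((tx, ty), dhash(patch)))
    return out


def find_matches(
    query: Gray, index: Dict[str, List[TileHash]], max_dist: int = 5
) -> List[Tuple[Tuple[int, int], str]]:
    """Report (tile position, known image) pairs with near-duplicate tile hashes."""
    matches = []
    for pos, qh in tile_hashes(query):
        for name, hashes in index.items():
            if any(hamming(qh, kh) <= max_dist for _, kh in hashes):
                matches.append((pos, name))
    return matches
```

Because tiles sit on a fixed grid, a copied region that has been shifted or rescaled won’t match here, which is one reason more robust features (like the edge and angle measurements suggested above) would be worth exploring.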


Identifying lighting issues. This requires computer vision software. Some older models produce images whose elements are lit by light sources coming from different directions. I’m pretty sure that Flux, at least, has had some level of light-source identification run on its source material; otherwise I don’t see how it could achieve the images it does.
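Proper lighting forensics fits 3D or surface-normal models, which is well beyond a quick sketch, but the flavor of the check can be shown with a deliberately crude Python heuristic. It assumes each patch contains a single smoothly shaded object, treats the summed brightness gradient as pointing roughly toward the light, and flags the image if patches disagree by more than a tolerance. The patch selection and the 45-degree tolerance are arbitrary assumptions.

```python
import math
from itertools import combinations
from typing import List

Gray = List[List[float]]


def light_angle(patch: Gray) -> float:
    """Angle in degrees of the summed central-difference brightness gradient.
    0 = brighter toward the right, 90 = brighter toward the bottom (image y grows down)."""
    gx = gy = 0.0
    for y in range(1, len(patch) - 1):
        for x in range(1, len(patch[0]) - 1):
            gx += patch[y][x + 1] - patch[y][x - 1]
            gy += patch[y + 1][x] - patch[y - 1][x]
    return math.degrees(math.atan2(gy, gx))


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def inconsistent_lighting(patches: List[Gray], tolerance: float = 45.0) -> bool:
    """True if any two patches imply light directions further apart than `tolerance`."""
    angles = [light_angle(p) for p in patches]
    return any(angle_diff(a, b) > tolerance for a, b in combinations(angles, 2))
```

Textures, shadows, and non-convex objects all break this assumption, so at best it would point a human at patches worth a closer look.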


Checking whether an image is “stable” with a given model. Images are normally generated by an iterative process, and typically the process stops when the image is no longer changing. If you can come up with exactly the same model and settings used to generate the image, the person who generated it ran generation until it was stable, and they used settings and a model that converge on a stable output, then an image being perfectly stable is a giveaway. The problem is that there is a rapidly proliferating number of models out there, not to mention possible settings, and no great way to derive those from the image. You also need access to the model, which you won’t have for proprietary services (unless you are that proprietary service). You might be able to identify an image created by a widely used commercial service like Midjourney, but I don’t think you’re going to have as much luck with the huge number of models on civitai.com or similar sites.
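Here’s a toy Python sketch of the stability test as described, under heavy assumptions: the hypothetical `step` function stands in for one more refinement pass of the exact model and settings that produced the image, images are flattened lists of floats, and `toy_step` is a made-up “model” that moves halfway toward a fixed image on each pass. Many real diffusion samplers run a fixed number of steps rather than stopping at convergence, which is exactly why the test only works with settings that converge on a stable output.

```python
from typing import Callable, List

Image = List[float]  # flattened pixel values
Step = Callable[[Image], Image]  # hypothetical: one refinement pass of the model


def mean_change(a: Image, b: Image) -> float:
    """Mean absolute per-pixel difference between two images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def is_stable(image: Image, step: Step, tol: float = 1e-3) -> bool:
    """True if one more pass of `step` barely changes the image."""
    return mean_change(image, step(image)) <= tol


def generate(start: Image, step: Step, tol: float = 1e-3, max_iter: int = 1000) -> Image:
    """Iterate `step` until the output stops changing (the comment's premise)."""
    x = start
    for _ in range(max_iter):
        nxt = step(x)
        if mean_change(x, nxt) <= tol:
            return nxt
        x = nxt
    return x


# Toy "model": each pass moves halfway toward a fixed attractor image.
TARGET = [1.0, 0.5, 0.25, 0.75]


def toy_step(img: Image) -> Image:
    return [0.5 * v + 0.5 * t for v, t in zip(img, TARGET)]
```

An image produced by `generate(..., toy_step)` passes `is_stable` with the same `toy_step`, while an arbitrary “photo” does not; with a different model or settings, the test says nothing.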


One approach that was historically used to identify manipulated images was looking for image compression artifacts; for example, you can spot JPEG compression artifacts that aren’t aligned to the 8x8 block grid. You might be able to do that with some models that were trained on low-quality images, but I’m skeptical that it’d work with better ones.
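A sketch of the classic block-grid check in Python, assuming a decoded grayscale image given as rows of numbers. JPEG compresses in 8x8 blocks, so pixel jumps tend to cluster at block boundaries; if the strongest boundary phase isn’t (0, 0), the grid has been shifted, which suggests cropping or pasting after compression. Real detectors use much more careful statistics; this just finds the phase with the most jump energy.

```python
from typing import List, Tuple

Gray = List[List[float]]


def column_phase(gray: Gray, block: int = 8) -> int:
    """Column offset (0..block-1) where horizontal pixel jumps are strongest.
    A jump between columns x and x+1 is credited to phase (x + 1) % block,
    so an unshifted JPEG grid peaks at phase 0."""
    energy = [0.0] * block
    for row in gray:
        for x in range(len(row) - 1):
            energy[(x + 1) % block] += abs(row[x + 1] - row[x])
    return max(range(block), key=lambda k: energy[k])


def grid_offset(gray: Gray, block: int = 8) -> Tuple[int, int]:
    """(column phase, row phase); rows are handled by transposing the image."""
    transposed = [list(col) for col in zip(*gray)]
    return column_phase(gray, block), column_phase(transposed, block)
```

For example, an image made of flat 8x8 blocks reports `(0, 0)`, and the same image with 3 columns and 5 rows cropped off reports `(5, 3)`.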


You can probably write software to look for some commonly seen errors today, like malformed fingers, but honestly, I think that’s mostly looking for temporary technical flaws, since these will go away.
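As a deliberately crude illustration of the malformed-fingers check, here’s a Python sketch that counts fingers by scanning across a binary hand mask and counting separate runs of hand pixels. It assumes a hypothetical upstream step has already segmented an upright hand with spread fingers and that you know which rows cross the fingers; anything more realistic would need a proper hand-pose model.

```python
from typing import Iterable, List

Mask = List[List[int]]  # 1 = hand pixel, 0 = background


def count_runs(row: List[int]) -> int:
    """Number of separate runs of foreground pixels in one scanline."""
    runs, inside = 0, False
    for px in row:
        if px and not inside:
            runs += 1
        inside = bool(px)
    return runs


def estimated_fingers(mask: Mask, finger_rows: Iterable[int]) -> int:
    """Largest run count over the scanlines that cross the fingers."""
    return max(count_runs(mask[y]) for y in finger_rows)


def suspicious_hand(mask: Mask, finger_rows: Iterable[int], expected: int = 5) -> bool:
    """Flag a hand whose scanlines cross more fingers than `expected`."""
    return estimated_fingers(mask, finger_rows) > expected
```

Touching or overlapping fingers merge into one run, so this undercounts as easily as it overcounts, which fits the point that such checks chase flaws that are going away anyway.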


My eyes. Nothing I’ve seen of AI detectors inspires confidence in me. I don’t feel like I can trust their results because incorrect guesses are inevitable, so I don’t bother using them.

I use my wife. She’s an artist, and has an eye for such things.

Do you really need one at this point? AI images are really easy to notice.