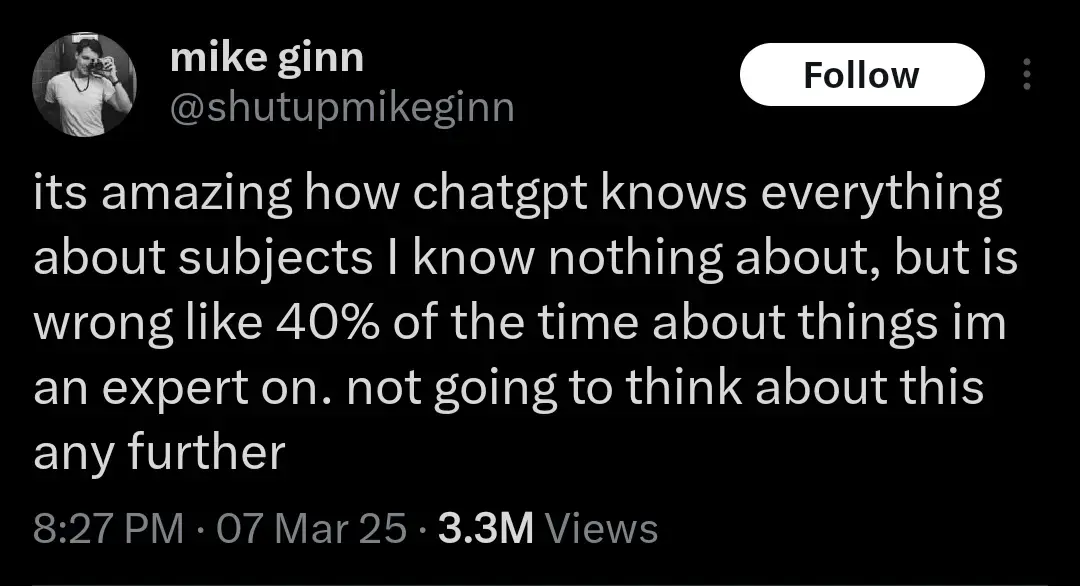

Another realization might be that the humans whose output ChatGPT was trained on were probably already 40% wrong about everything. But let’s not think about that either. AI Bad!

I’ll bite. Let’s think:

- there are three humans who are 98% right about what they say, and where they know they might be wrong, they indicate it
- now there is an llm (fuck capitalization, I hate how capitals are shoved everywhere that much) trained on their output
- now the llm is asked about the topic and computes the answer string

By definition, that answer string can contain all the probably-wrong things without the proper indicators (“might”, “under such and such circumstances”, etc.).

If you want to claim that a 40%-wrong llm means 40%-wrong sources, prove me wrong.

It’s more up to you to prove that a hypothetical edge case you dreamed up is more likely than what happens in a normal bell curve. Given the size of typical LLM training data, this seems futile, but if that’s how you want to spend your time, hey, knock yourself out.

Lol. Be my guest and knock yourself out, dreaming you know things


This is a salient point that’s well worth discussing. We should not be training large language models on any supposedly factual information that people put out. It’s super easy to call out a bad research study and have it retracted, but you can’t just explain to an AI that the study was wrong; you have to completely retrain it every time. Exacerbating this issue is the way people tend to view large language models as somehow objective describers of reality because they’re synthetic and emotionless. In truth, an AI holds exactly the same biases as the people who put together the data it was trained on.

The quote was originally about news and journalists.

The phenomenon is called Gell-Mann amnesia.

deleted by creator

You didn’t, I did

The article discusses a study by OpenAI and MIT Media Lab revealing that heavy ChatGPT users — dubbed “power users” — are showing signs of addiction to the chatbot. These signs include preoccupation, withdrawal, mood changes, and loss of control. While most users don’t form emotional connections with ChatGPT, those who use it more frequently tend to view it as a “friend,” especially if they feel lonely or emotionally unfulfilled.

Interestingly, people using ChatGPT for personal reasons (like sharing emotions) showed less emotional dependency than those using it for non-personal tasks (like brainstorming). Also, text-based interactions led to more emotional language than voice interactions, with brief voice use correlating to better well-being. Overall, prolonged use — regardless of purpose — increased the risk of emotional dependence.

You said:

Make a list of the main points and for each explain why it will be worse when it is society wide

ChatGPT said:

Here’s a list of the main points from the article, along with why each could be more concerning on a society-wide scale:

1. Heavy ChatGPT users show signs of addiction (preoccupation, withdrawal, mood changes, loss of control).
   Why it's worse at scale: If large numbers of people become dependent on AI tools, especially to the point of addiction, it could lead to widespread mental health issues, reduced productivity, and a society increasingly unable to function without AI assistance.
2. Long-term users tend to form emotional bonds with ChatGPT, sometimes seeing it as a “friend”.
   Why it's worse at scale: A society where many people substitute AI for real human connection could see rising loneliness, social detachment, and a decline in community and interpersonal relationships. This weakens social bonds and may impact mental health and civic cohesion.
3. Lonelier individuals are more likely to use ChatGPT heavily and become emotionally attached.
   Why it's worse at scale: This could create a feedback loop where loneliness drives AI use, and AI use further isolates people from real-world interactions, exacerbating social alienation on a mass level.
4. People stress out over subtle changes in ChatGPT’s behavior.
   Why it's worse at scale: If millions of people are emotionally reactive to minor algorithmic changes, this gives tech companies enormous influence over collective emotional states. It also creates societal instability, as shifts in AI behavior could unintentionally trigger widespread anxiety or confusion.
5. Emotional language is used more in text interactions than voice, and brief voice use is associated with better well-being.
   Why it's worse at scale: If the most popular and accessible form of interaction (text) increases emotional entanglement, and the healthier option (brief voice use) is less utilized, we might unintentionally nudge society toward less emotionally healthy patterns of AI interaction.
6. Users who engage with ChatGPT for non-personal tasks are more emotionally dependent than those who use it for personal reasons.
   Why it's worse at scale: This is counterintuitive and dangerous because it suggests people may not realize they're forming dependencies while doing routine work. If AI tools meant to boost productivity are quietly making users emotionally reliant, it could have long-term effects on workforce dynamics and mental resilience.
7. Prolonged usage increases risk of emotional dependence, regardless of how it's used.
   Why it's worse at scale: As AI becomes embedded in everyday life, the likelihood of widespread emotional dependence increases. This could shift power dynamics between humans and AI, making people less autonomous and more easily influenced by algorithmic systems.

Let me know if you want this formatted for a presentation, policy paper, or discussion.

That’s really interesting. Its output to this prompt totally ignored the biggest and most obviously detrimental effect of this problem at scale.

Namely, emotional dependence will give the big tech companies that own these AIs increased power over people.

It’s not as if these concepts aren’t widely discussed online: everything from Meta’s emotional manipulation experiments or Cambridge Analytica through to the meltdowns Replika users had over changes to the algorithm is relevant here.

It’s the 4th point

Sort of, but I think “influence over emotional states” understates it and is just the tip of the iceberg. It also made it sound passive and accidental. The real problem will be overt control, as a logical extension of the kinds of trade-offs we already see people make about, for example, data privacy. With the Replika fiasco, I bet heaps of those people would have paid good money to get their virtual love interests de-“lobotomized”.

I think this power to shape the available knowledge (removing it, paywalling it, gating it in discriminatory ways, leveraging it, and ultimately manipulating people for advertising, state security, and personal reasons) is why it should be illegal to privately own ML/AI models of any kind. Drive them all underground, and only let the open ones benefit from public sales.

What the fuck is vibe coding? Whatever it is, I hate it already.

Using AI to hack together code without truly understanding what you’re doing.

Andrej Karpathy (one of the founders of OpenAI; he left OpenAI, worked at Tesla from 2017 to 2022, worked at OpenAI a bit more, and is now working on his startup “Eureka Labs - we are building a new kind of school that is AI native”) made a tweet defining the term:

There’s a new kind of coding I call “vibe coding”, where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It’s possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper so I barely even touch the keyboard. I ask for the dumbest things like “decrease the padding on the sidebar by half” because I’m too lazy to find it. I “Accept All” always, I don’t read the diffs anymore. When I get error messages I just copy paste them in with no comment, usually that fixes it. The code grows beyond my usual comprehension, I’d have to really read through it for a while. Sometimes the LLMs can’t fix a bug so I just work around it or ask for random changes until it goes away. It’s not too bad for throwaway weekend projects, but still quite amusing. I’m building a project or webapp, but it’s not really coding - I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.

People ignore the “It’s not too bad for throwaway weekend projects” part and try to use this style of coding to create “production-grade” code… Let’s just say it’s not going well.

source (xcancel link)

chatbots and ai are just dumber 1990s search engines.

I remember 90s search engines. AltaVista was pretty OK at searching the small web that existed, but I’m pretty sure I can get better answers from the LLMs tied to Kagi search.

AltaVista also got blown out of the water by Google (back when it was just a search engine), and that was in the 00s, not the 90s. 25 to 35 years ago is a long time; search is so, so much better these days (or worse, if you use a “search” engine like Google now).

Don’t be the product.

Do you guys remember when the internet was the new thing and everybody was like: “Look at those dumb fucks, just putting everything online”, and now it’s: “Look at this weird motherfucker who doesn’t post anything online”?

Remember when people used to say, and believe, “Don’t believe everything you read on the internet”?

I miss those days.

people tend to become dependent upon AI chatbots when their personal lives are lacking. In other words, the neediest people are developing the deepest parasocial relationship with AI

Preying on the vulnerable is a feature, not a bug.

I kind of see it more as a sign of utter desperation on the human’s part. They lack connection with others to such a degree that anything similar can serve as a replacement. It’s kind of reminiscent of Harlow’s experiments with baby monkeys. The videos from that study are interesting but make me feel pretty bad about what we do to nature. Anywho, there you have it.

And the number of connections and friends the average person has has been in free fall for decades…

I dunno. I connected with more people on reddit and Twitter than irl tbh.

Different connection but real and valid nonetheless.

I’m thinking of places like r/stopdrinking, r/petioles, r/bipolar; that shit’s been therapy for me tbh.

At least you’re not using chatgpt to figure out the best way to talk to people, like my brother in finance tech does now.

It’s too bad that some people don’t seem to comprehend that all chatgpt is doing is word prediction. All it knows is which next word fits best based on the words before it. To call it AI is an insult to AI… we used to call OCR AI; now we know better.
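For anyone wondering what “word prediction” means in its very simplest form, here is a toy bigram predictor in Python: it counts which word follows which in a tiny made-up corpus and returns the most frequent follower. This is only a sketch of the general idea under heavy simplifying assumptions, not how ChatGPT works internally; real LLMs predict subword tokens with a neural network conditioned on a long context, not a lookup table of word pairs. The function names and the corpus are hypothetical, made up for this example.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    followers: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())  # .get() does not create empty entries
    if not counts:
        return None
    # most_common breaks ties by first-seen order
    return counts.most_common(1)[0][0]


def generate(followers: Dict[str, Counter], start: str, length: int) -> List[str]:
    """Greedily chain next-word predictions, starting from `start`."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(followers, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


# Hypothetical toy corpus, made up for illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))           # → cat
print(" ".join(generate(model, "the", 4)))  # → the cat sat on the
```

Note that the greedy chain happily loops back to “the” and keeps going: the predictor has no idea what it is saying, only which word tended to come next in its training text.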

New DSM / ICD is dropping with AI dependency. But it’s unreadable because image generation was used for the text.